This article describes my experience troubleshooting a severe degradation in Maximo performance after upgrading Maximo and installing several add-ons. The problem was caused by fragmentation of the Maximo tables in the SQL Server database. After defragmenting them, query speed improved several-fold.

Problem

Recently I was involved in a project where we needed to upgrade Maximo to the latest version and install several large add-ons, including SP, HSE (which is Oil & Gas), and Spatial (which is the same as Utilities). This was the first time I had seen a system with that many add-ons installed. In this case, Maximo runs on a SQL Server database.

We configured all WebSphere settings and performed the recommended upgrade activities:

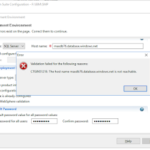

- Run integrity checks before and after the upgrade

- Update statistics

- Rebuild indexes
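For readers who have not done this kind of maintenance on SQL Server before, the sketch below generates the T-SQL statements typically behind those steps. It is a minimal illustration under assumptions, not the exact script used on this project: the database name `maxdb`, the `dbo` schema, the table list, the `WITH FULLSCAN` option, and the helper names are all hypothetical choices.

```python
from typing import List


def quote_ident(name: str) -> str:
    """Wrap a SQL Server identifier in brackets, escaping any closing bracket."""
    return "[" + name.replace("]", "]]") + "]"


def integrity_check_sql(database: str) -> str:
    """Integrity check for the whole database (run before and after the upgrade)."""
    return f"DBCC CHECKDB ({quote_ident(database)}) WITH NO_INFOMSGS;"


def maintenance_sql(tables: List[str], schema: str = "dbo") -> List[str]:
    """Index rebuild plus statistics update for each table.

    The schema default and the table list are assumptions supplied by the caller.
    """
    statements = []
    for table in tables:
        target = f"{quote_ident(schema)}.{quote_ident(table)}"
        # Rebuilding an index also refreshes the statistics of that index.
        statements.append(f"ALTER INDEX ALL ON {target} REBUILD;")
        # Column statistics are not refreshed by the rebuild, so update them as well.
        statements.append(f"UPDATE STATISTICS {target} WITH FULLSCAN;")
    return statements
```

For example, `maintenance_sql(["WORKORDER", "TICKET"])` yields one `ALTER INDEX ALL ... REBUILD` and one `UPDATE STATISTICS ... WITH FULLSCAN` statement per table. The rebuild is placed first because it already refreshes index statistics, leaving the statistics update to cover column statistics.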

Even so, after the upgrade Maximo ran much slower than the pre-upgrade version.

Cause

To analyse the problem, I tested and compared the performance of several queries on the WORKORDER and TICKET tables: some designed to use indexes, others designed to force a full table scan. For the queries that relied more on indexes, the performance gap was smaller (the upgraded version was 2-3 times slower), while the queries that required a full table scan were significantly slower (10-20 times slower).
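A before/after comparison like this can be scripted so that each query is measured the same way in both environments. The sketch below is a hypothetical harness, not the one used here: `run_query` stands for any zero-argument callable that executes one query, and the median of several runs is taken to damp noise.

```python
import statistics
import time
from typing import Callable, Dict


def median_runtime(run_query: Callable[[], object], repeats: int = 5) -> float:
    """Run a query callable several times and return the median wall-clock time in seconds."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_query()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


def slowdown_factors(before: Dict[str, float], after: Dict[str, float]) -> Dict[str, float]:
    """Return after/before time ratios for every query measured in both environments."""
    return {name: after[name] / before[name] for name in before if name in after}
```

With pyodbc, for example, `run_query` could be `lambda: cursor.execute(sql).fetchall()`; fetching every row makes the measurement include reading the data, not just starting the query. Whether SQL Server's buffer cache is warm or cold strongly affects I/O-bound queries such as full scans, so it is worth keeping that condition the same in both environments.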

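From these results, we concluded that the slowdown was caused by some sort of I/O bottleneck. A full table scan reads every page of the table, so it is the query type most sensitive to how efficiently data can be read from disk.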

Solution

We defragmented all tables in Maximo, which restored the system's performance to roughly its pre-upgrade level. Since I'm not very experienced with SQL Server, I had never known that this was something we could do to Maximo tables. My theory is that the upgrade and the installation of several large add-ons executed thousands of INSERT and UPDATE statements, which significantly fragmented the data stored on disk, so the database had to perform many more I/O operations to retrieve the same data.
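One common way to do this on SQL Server is to measure fragmentation with the `sys.dm_db_index_physical_stats` dynamic management function and then reorganize or rebuild each affected index. The sketch below shows that triage in Python. The thresholds (reorganize from 5%, rebuild from 30%, skip indexes under 1,000 pages) are widely quoted rules of thumb, not values from this project, and the helper names are hypothetical. Heaps (tables without a clustered index) cannot be reorganized, so the sketch rebuilds them with `ALTER TABLE ... REBUILD` instead.

```python
from dataclasses import dataclass
from typing import Optional

# Per-index fragmentation of user tables in the current database ('LIMITED' is the fastest mode).
FRAGMENTATION_QUERY = """
SELECT s.name AS schema_name, o.name AS table_name, i.name AS index_name,
       ps.avg_fragmentation_in_percent, ps.page_count
FROM sys.dm_db_index_physical_stats(DB_ID(), NULL, NULL, NULL, 'LIMITED') AS ps
JOIN sys.indexes AS i ON i.object_id = ps.object_id AND i.index_id = ps.index_id
JOIN sys.objects AS o ON o.object_id = ps.object_id
JOIN sys.schemas AS s ON s.schema_id = o.schema_id
WHERE o.is_ms_shipped = 0 AND ps.alloc_unit_type_desc = 'IN_ROW_DATA';
"""


@dataclass
class IndexFragmentation:
    schema_name: str
    table_name: str
    index_name: Optional[str]  # None for a heap (no clustered index)
    avg_fragmentation_in_percent: float
    page_count: int


def quote_ident(name: str) -> str:
    """Wrap a SQL Server identifier in brackets, escaping any closing bracket."""
    return "[" + name.replace("]", "]]") + "]"


def defrag_statement(row: IndexFragmentation, reorganize_at: float = 5.0,
                     rebuild_at: float = 30.0, min_pages: int = 1000) -> Optional[str]:
    """Pick a defragmentation statement for one index, or None to leave it alone."""
    if row.page_count < min_pages or row.avg_fragmentation_in_percent < reorganize_at:
        return None  # small or barely fragmented: not worth the maintenance cost
    table = f"{quote_ident(row.schema_name)}.{quote_ident(row.table_name)}"
    if row.index_name is None:
        return f"ALTER TABLE {table} REBUILD;"  # heaps cannot be reorganized
    index = quote_ident(row.index_name)
    if row.avg_fragmentation_in_percent >= rebuild_at:
        return f"ALTER INDEX {index} ON {table} REBUILD;"
    return f"ALTER INDEX {index} ON {table} REORGANIZE;"
```

Each row returned by `FRAGMENTATION_QUERY` maps onto `IndexFragmentation`. The generated statements are best reviewed and run in a maintenance window, because `REBUILD` is an offline operation by default and can block access to the table while it runs.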

Another interesting thing I learned from this project is that the UPDATEDB process took a long time. Even after we tried and implemented several optimizations, the whole process still took more than 5 hours, which was a problem because the resulting downtime window was too long for the business to accept. However, when we rebuilt the indexes and defragmented the tables before running UPDATEDB, the process took only 3 hours to complete.