Maximo Specialist Services and Productivity Tools

Maximo doesn’t work? Maximo is too slow? Maximo is a mess? Too much customisation and no one understands it? I can help you with that.

95%

Java customisation removal

120%

Customer satisfaction

200%

Improved performance and efficiency

Services

Decustomisation

Too much customisation can slow you down. I have helped Australian companies, large and small, remove and refactor thousands of complex Java classes and automation scripts. Once the process is complete, your Maximo will be simple, lean, mean and fast.

ERP Integration

Need to synchronise POs, receipts, and invoices with your ERP? Past efforts have failed or did not deliver a satisfactory result? I have delivered a few dozen ERP integration projects with both large and small ERP suites, and I can help you with this.

Process Optimisation

End-users complain about system performance? Processes too clunky? Buried in support calls? These are the symptoms of a system that needs optimisation. I have extensive experience reviewing Maximo systems and processes as a whole, and advising on how to simplify the system and streamline the processes.

Products

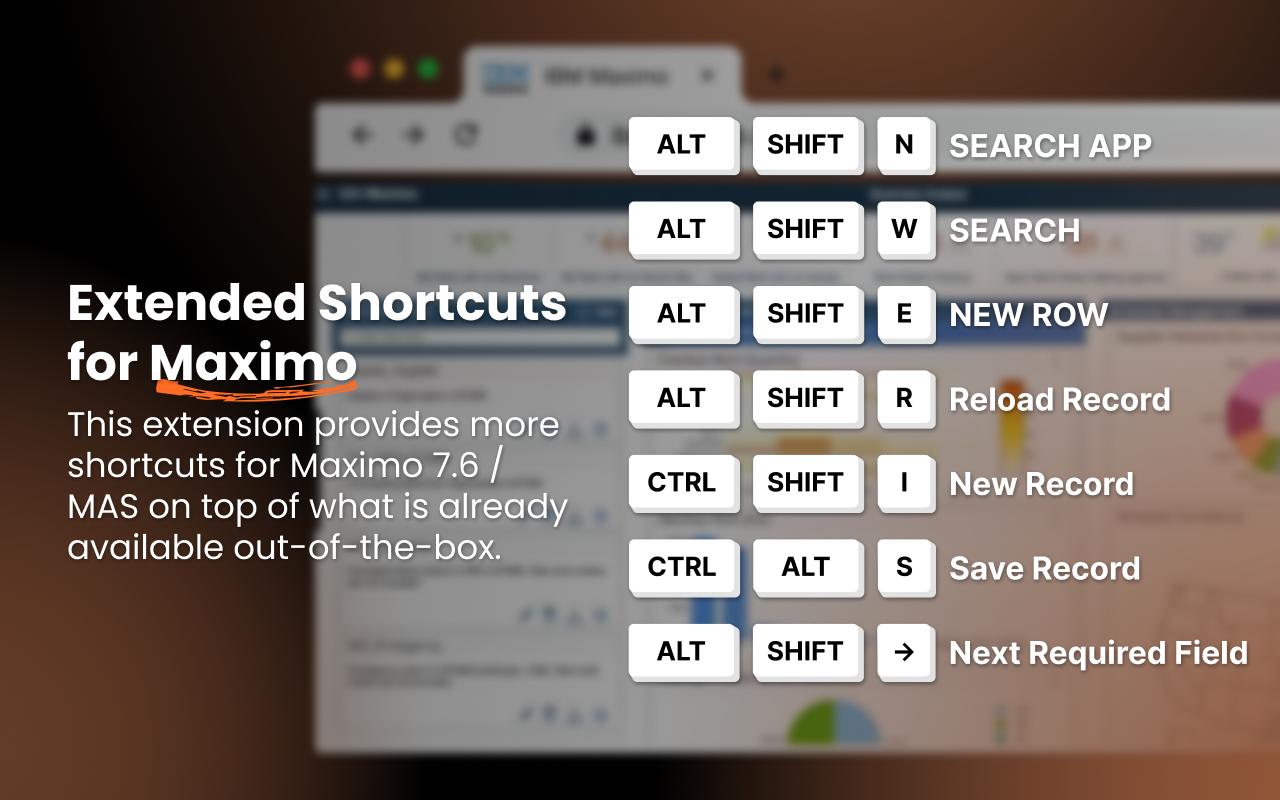

MaxShortcuts

A Chrome extension that provides additional keyboard shortcuts to speed up navigation in Maximo.

MaxQA

An automation tool that allows business analysts to build automated test cases in Excel. Works with Maximo and any other web-based application.

MaxSearch

Indexes unstructured data, such as attachments and images, and allows users to run semantic searches for relevant records, such as work orders or assets, using natural language.

About Me

I am a freelance Maximo consultant with a deep understanding of the software and a track record of delivering measurable results to clients in the Utility, Energy, and Transportation industries.

“Viet is the best Maximo consultant I have worked with over my career as an Asset Manager. I’m exceedingly satisfied with his services. Highly recommend.”

John McAllister

Asset Manager

Want to talk?

If you have a Maximo problem, or an idea you want to see rolled out in production, I am here to help.