In an earlier post, I provided an example of how to extract data and send it to a user as a CSV file via email. A friend then asked me about a requirement he was dealing with. He has an escalation that sends an email whenever an integration error occurs. The problem with this approach is that if many transactions fail, the administrator receives a flood of emails.

An alternative is to set up a scheduled BIRT report that lists all errors in one file. However, this approach has its own problem: on days with no failures, the admin still receives an email and still has to open the file to check whether there are any errors.

This is actually a common requirement. Below are some examples:

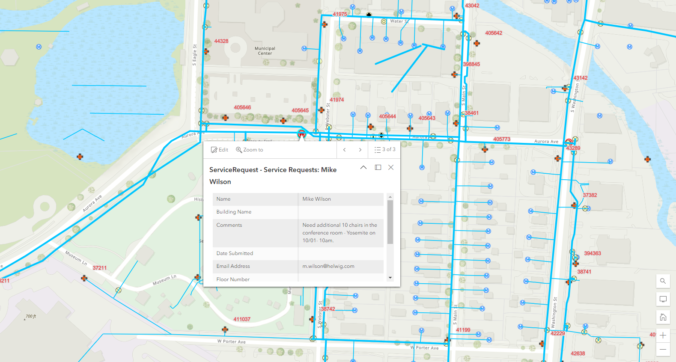

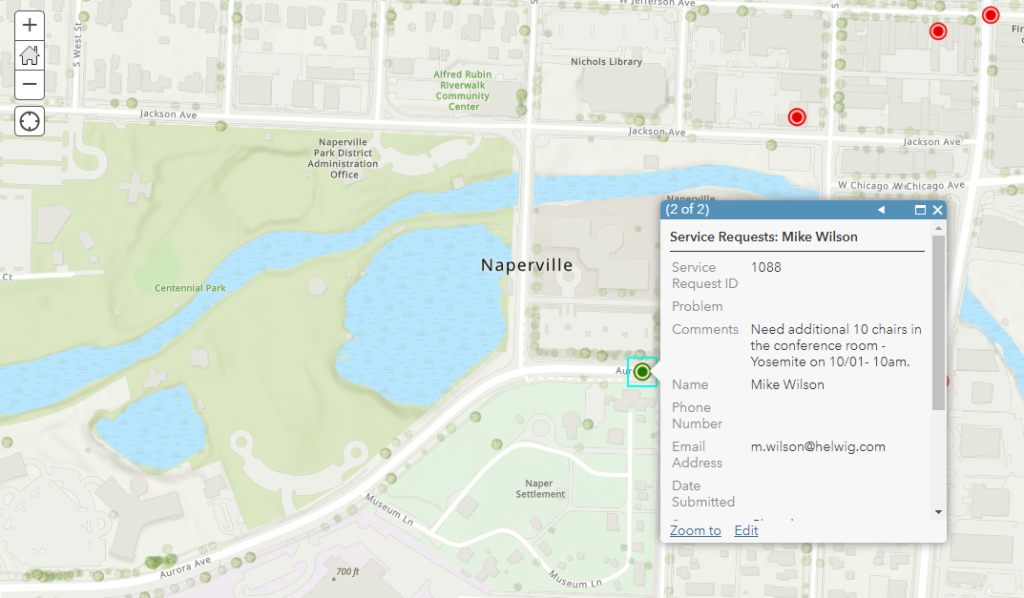

- Operations managers want to monitor a list of critical assets. Maximo should send at most one email per day listing the active SRs and WOs for any asset that is down. No email should be sent if there are no issues.

- Operators want a single daily email listing all high-priority work orders reported that day, such as work orders dealing with water quality issues or sewer overflows.

- The system administrator wants a daily list of suspicious login activity.

- System owners want to monitor data quality issues, with a report sent only when issues are found.
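All of these examples share one rule: collect every finding into a single message, and skip sending entirely when the list is empty. Below is a minimal sketch of that rule in plain Python using the standard-library `email.message.EmailMessage`. It is not Maximo code: the issue dictionaries and their `id`/`description` keys are hypothetical, and in a Maximo script the list would come from an MboSet query instead.

```python
from email.message import EmailMessage


def build_daily_digest(issues, recipient, subject):
    """Return one EmailMessage listing all issues, or None if there are none.

    `issues` is assumed to be a list of dicts with hypothetical keys
    'id' and 'description'.
    """
    if not issues:
        # Nothing to report today: the caller skips sending altogether.
        return None

    # One line per issue, so the admin gets a single summary email.
    lines = [f"{len(issues)} issue(s) found:"]
    for issue in issues:
        lines.append(f"- {issue['id']}: {issue['description']}")

    msg = EmailMessage()
    msg["To"] = recipient
    msg["Subject"] = subject
    msg.set_content("\n".join(lines))
    return msg
```

The caller only sends when the function returns a message, which gives the "at most one email per day, none on quiet days" behaviour described above.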

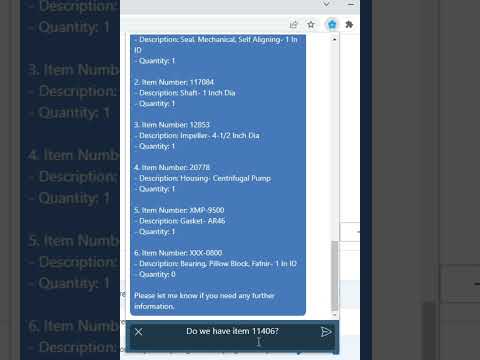

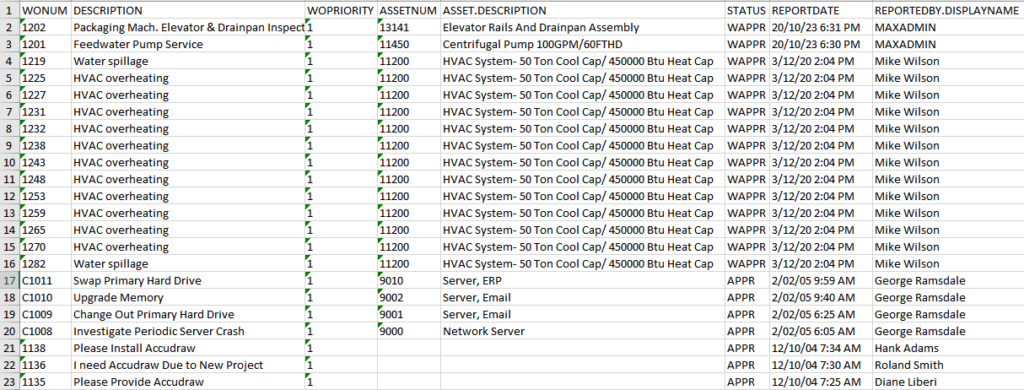

Below is an example of how to extract all priority 1 (P1) work orders in site BEDFORD and save the data to an Excel file. I didn't include the code to attach the file and send the email, as it was already provided in my previous post.

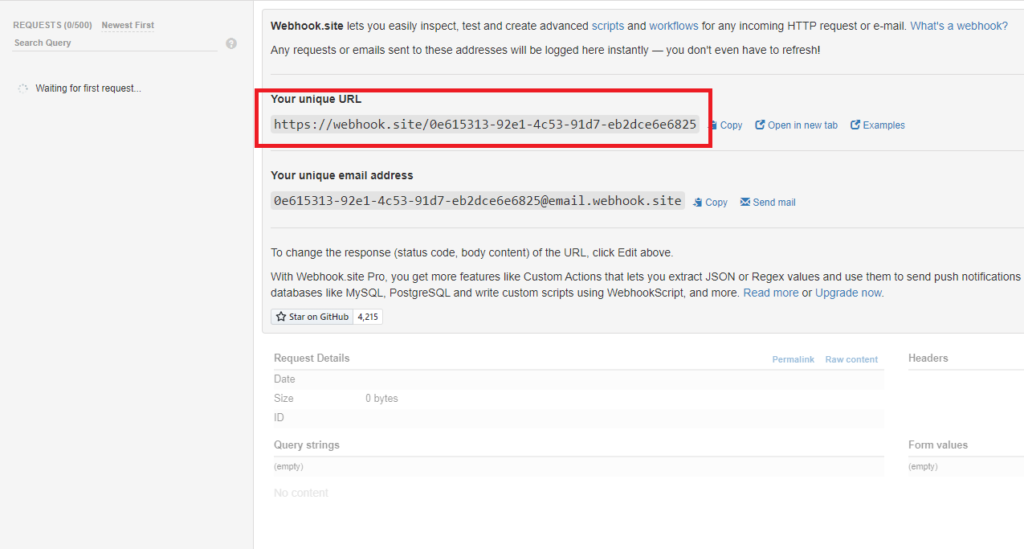

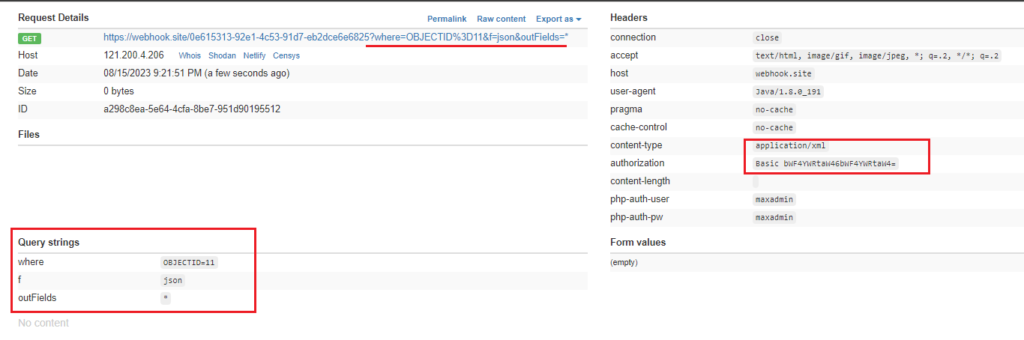

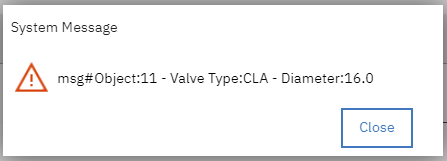

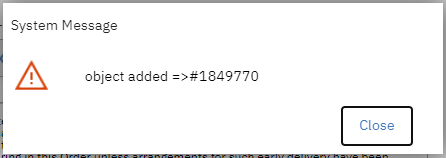
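The script in the screenshots runs inside Maximo and writes an Excel file. As a rough, self-contained illustration of the same filter-and-export step, the sketch below works on plain Python dictionaries and writes CSV with the standard-library `csv` module (Excel opens CSV directly) instead of producing a native Excel file. The field names mirror Maximo's WORKORDER attributes (`wonum`, `siteid`, `wopriority`, `status`), but treat them as an assumption; inside Maximo the filter would normally go into the MboSet where clause, for example `siteid = 'BEDFORD' and wopriority = 1`.

```python
import csv
import io

# Columns to export; assumed to match WORKORDER attribute names.
FIELDS = ["wonum", "description", "siteid", "wopriority", "status"]


def export_p1_bedford(workorders):
    """Keep priority 1 work orders in site BEDFORD and return (count, csv_text)."""
    matches = [
        wo for wo in workorders
        if wo["siteid"] == "BEDFORD" and wo["wopriority"] == 1
    ]

    # Write to an in-memory buffer; a real script would write to a file
    # and attach it to the email only when count > 0.
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(matches)
    return len(matches), buf.getvalue()
```

Returning the match count alongside the file content lets the caller decide whether an email is needed at all.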

As usual, I tested the script by calling it through the API. Below is how the data looks when opened in Excel.
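For reference, a call like this can be scripted as well. The sketch below only builds the HTTP request with the standard-library `urllib.request`; it does not send it. The endpoint path `/api/script/<name>` and the `apikey` header are assumptions based on Maximo Manage conventions (older 7.6 systems typically use `/oslc/script/<name>`), and the script name `EXPORT_P1_WO` is hypothetical. Check your own environment before relying on either.

```python
import urllib.request


def build_script_request(base_url, script_name, api_key):
    """Build (but do not send) a GET request that runs an automation script.

    Assumes a Maximo Manage-style endpoint /api/script/<name> authenticated
    with an 'apikey' header; adjust for your version and auth setup.
    """
    url = f"{base_url.rstrip('/')}/api/script/{script_name}"
    return urllib.request.Request(url, headers={"apikey": api_key}, method="GET")


# Hypothetical server and script name, for illustration only.
req = build_script_request("https://maximo.example.com/maximo/", "EXPORT_P1_WO", "my-api-key")
```

Passing the request to `urllib.request.urlopen(req)` would actually execute the script on the server.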

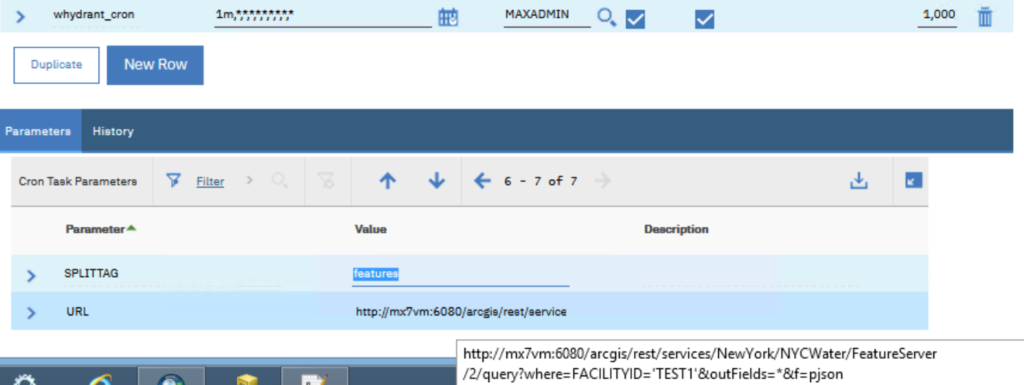

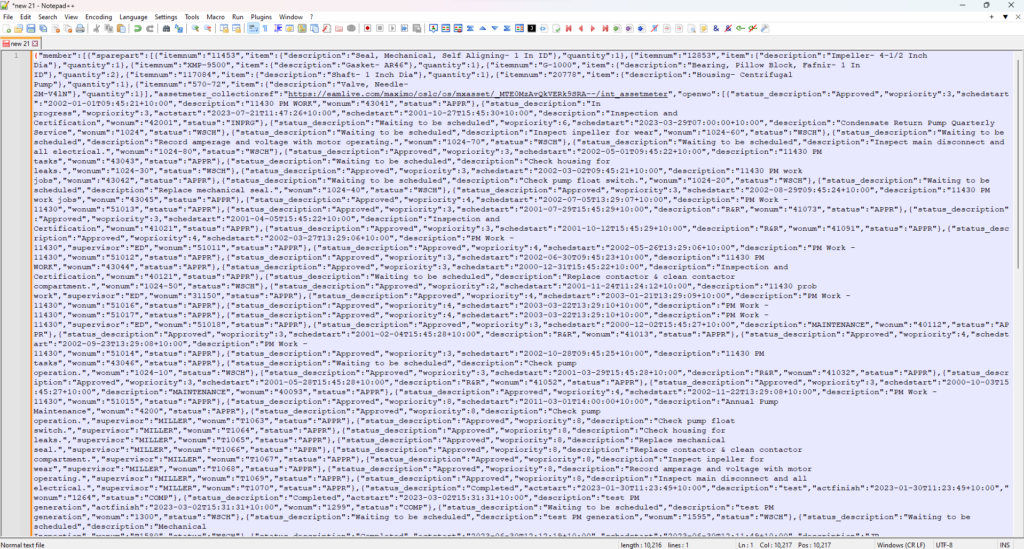

For data aggregation or when complex joins are required, we can also run an SQL query to retrieve data. Below is an example that provides a list of locations and the total number of work orders for each location.

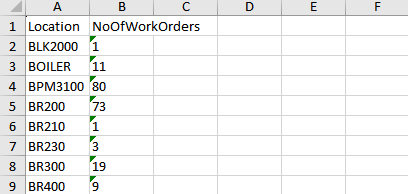
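The aggregation itself is ordinary SQL. The script in the screenshot runs its query against the Maximo database; the sketch below substitutes Python's built-in `sqlite3` with a tiny in-memory `workorder` table so the `GROUP BY` query can be tried anywhere. The table and column names follow Maximo's WORKORDER table, but the sample rows are made up purely for the demo.

```python
import sqlite3


def wo_count_by_location(conn):
    """Return (location, work order count) pairs, busiest location first."""
    sql = """
        SELECT location, COUNT(*) AS wo_count
        FROM workorder
        WHERE location IS NOT NULL
        GROUP BY location
        ORDER BY wo_count DESC, location
    """
    return conn.execute(sql).fetchall()


if __name__ == "__main__":
    # Made-up demo data in an in-memory database.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE workorder (wonum TEXT, location TEXT)")
    conn.executemany(
        "INSERT INTO workorder VALUES (?, ?)",
        [("1001", "PUMP01"), ("1002", "PUMP01"), ("1003", "BOILER")],
    )
    print(wo_count_by_location(conn))  # [('PUMP01', 2), ('BOILER', 1)]
```

Pushing the grouping into SQL means the script only iterates over one row per location rather than over every work order.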

Below is the data exported by the script: